Automate your life!

With expertise in C++, Python, HTML, and various other languages, I specialize in firmware programming, website development, and custom solutions for any project. Whether it’s developing the GrowRight system’s embedded firmware, creating seamless integrations, or building responsive websites like Macroponics.com and Macroponics.cloud, I have the skills to bring your ideas to life.

From automating processes to designing intuitive user interfaces, I am capable of handling diverse programming challenges. My experience spans embedded systems, cloud solutions, and web development, making me well-equipped to tackle any project you have in mind.

Let me help automate your life and bring your ideas to reality!

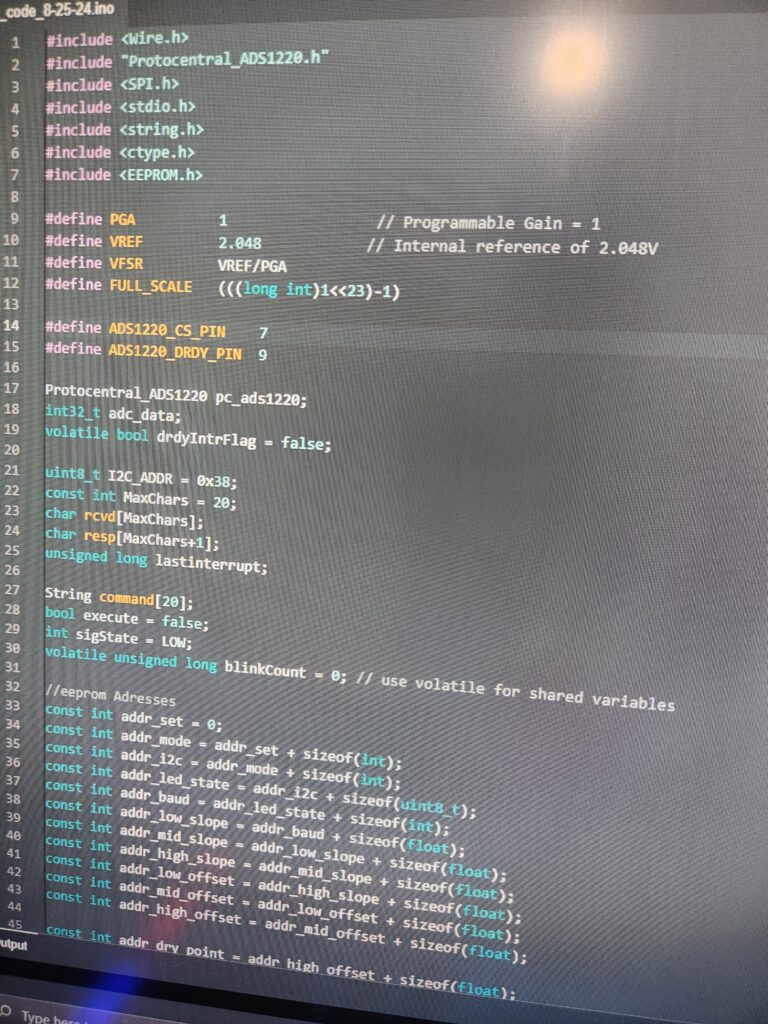

On the left, you’ll find example code from the GrowRight system, showcasing my proficiency in firmware programming and attention to detail in developing embedded solutions.

Below, you can watch a demo video of the custom peristaltic pumps designed specifically for the GrowRight system. From the circuit boards to the firmware and GUI software, every aspect was custom engineered to work seamlessly together, demonstrating my expertise in hardware and software integration.

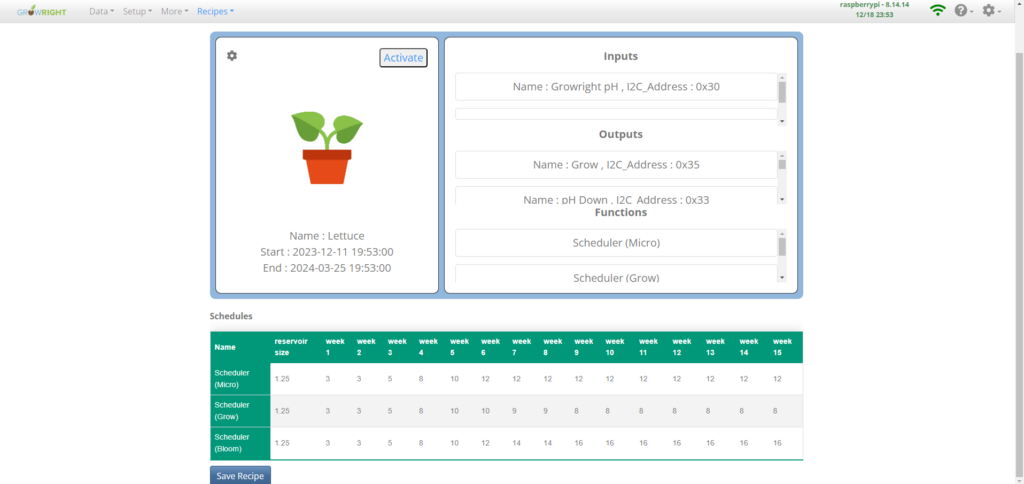

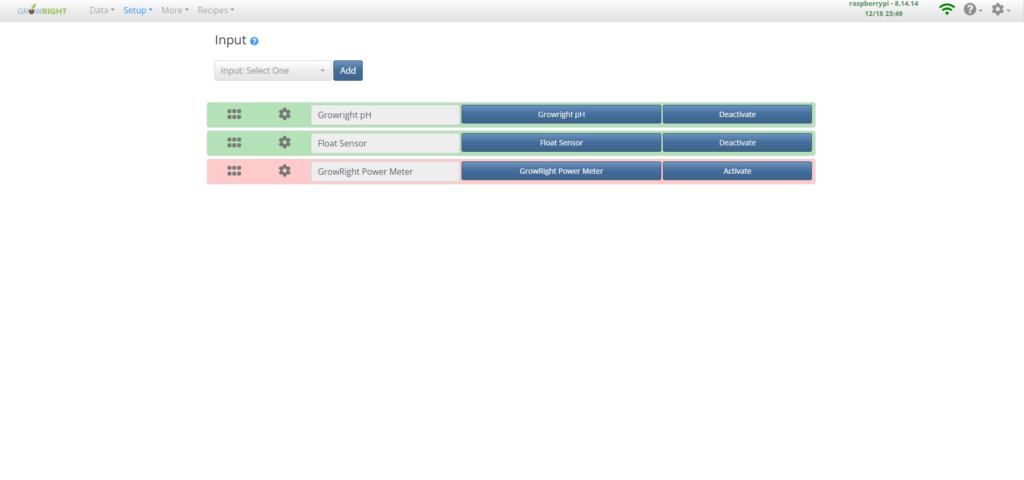

The screenshots below show the web GUI created for the GrowRight system.

Developing software for the GrowRight system, with its challenges and successes documented in the photographs and screenshots above, taught me that the real lessons of programming and cybersecurity come from direct experience.

The Ultimate Teacher: Hands-On Experience in Programming and Cybersecurity

While hundreds of tutorials and guides can provide a solid foundation, nothing compares to the lessons learned through direct, hands-on practice. Engaging in activities like pen testing and building your own systems not only reinforces theoretical knowledge but also exposes you to real-world challenges that no tutorial can fully simulate. By actively exploring vulnerabilities, experimenting with code, and confronting the unpredictable nature of cyber threats, you cultivate a deeper, more intuitive understanding of programming and cybersecurity. This experiential approach transforms abstract concepts into tangible skills, making you not just a passive learner, but an active architect of your own expertise.

Tutorials can serve as valuable jumping-off points for anyone new to the field, offering a structured introduction to complex topics. However, as many experienced professionals have found, these resources are just the beginning. This page is dedicated to the discussion of programming, cybersecurity, and related fields, and it represents a collaborative space where experienced practitioners and new learners come together.

By forming a close-knit community of programmers, hackers, cybersecurity professionals, and curious newcomers, we can more effectively share insights, best practices, and cutting-edge techniques. This collective approach not only helps disseminate the most useful information but also fosters an environment where practical experience, real-world problem solving, and continual learning can thrive. Whether you’re just starting out or looking to deepen your expertise, our community stands as a testament to the idea that while tutorials lay the groundwork, hands-on experience and mutual collaboration are the true teachers in the ever-evolving landscape of technology.

Black Hat vs. White Hat Hacking: Understanding the Ethical Divide

In the world of cybersecurity and programming, hacking is often misunderstood. The term “hacker” does not inherently imply criminal activity; it simply refers to someone who deeply understands computer systems and can manipulate them in ways that others may not anticipate. What separates ethical hackers from malicious ones is the intent behind the hacking.

Black Hat Hacking refers to hacking done with malicious intent. Black hat hackers exploit vulnerabilities in systems for personal gain, whether through stealing data, causing disruption, or bypassing security measures for unethical purposes. Their actions can cause harm, violate privacy, and disrupt essential services.

White Hat Hacking, on the other hand, is the practice of using hacking skills for ethical and constructive purposes. White hat hackers, often known as “ethical hackers,” work with the permission of the system’s owner to strengthen cybersecurity by identifying vulnerabilities before malicious hackers can exploit them. They follow legal and moral guidelines, ensuring that their work benefits individuals, organizations, and society as a whole.

The power of hacking, like any advanced technology, depends entirely on how it is used. That is why I have dedicated a separate page to an ethical framework for hacking and cybersecurity. This framework provides guidance on how to use this technology responsibly, ensuring that it serves as a force for good rather than destruction. Understanding the ethical responsibilities of programming and hacking is crucial, as these skills have the potential to shape the digital world for better or worse.