Endless Potential

With the power of WordPress, I can create a custom site to suit any product.

I specialize in creating custom websites tailored to your specific needs. Whether it’s an eCommerce platform like the GrowRight website I built, or a resource hub like the Wikipedia database I set up using Kiwix, I can bring your vision to life. My process involves using reliable technologies like Docker and MariaDB to ensure your website is secure, fast, and easy to manage.

No matter the project, I can design and develop a website that fits your requirements, ensuring a seamless user experience and robust functionality.

See the embedded sites to the right for the websites mentioned.

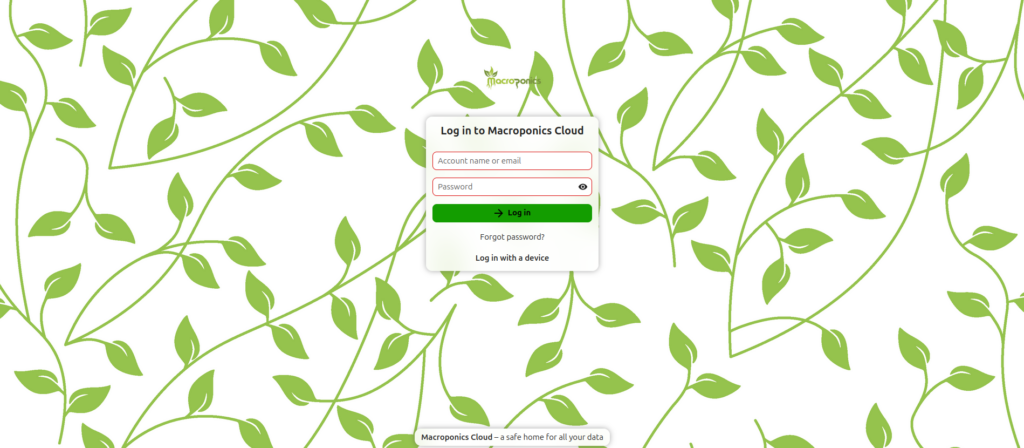

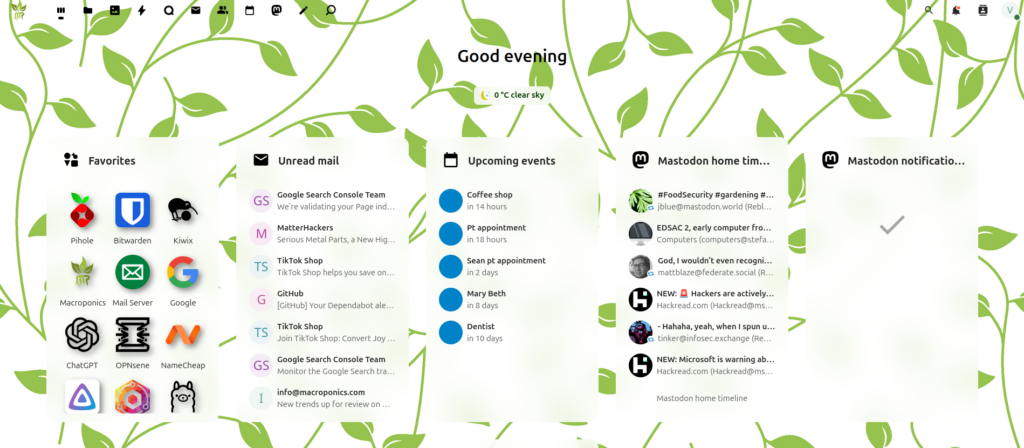

I offer a custom-hosted Nextcloud service through Macroponics.cloud, providing secure and reliable cloud storage tailored to your needs. With this service, you’ll have your own private cloud where you can store, share, and access files with ease. The service also comes with a custom email domain and email server hosting, so you can manage both your files and your communications on a single platform.

The service is available in various pricing tiers based on the amount of storage required, ensuring flexibility for individuals and businesses of all sizes. Whether you need a small personal cloud or an extensive business solution, I can offer a plan that suits your needs.

Explore the service and see how it can benefit you:

Let me help you take control of your data and communications with a reliable, secure cloud solution.

Single Account

Enjoy all the benefits of the Macroponics network

(1 User)

- 20 GB Cloud Storage

- 5 GB Mail Storage

- Hosting service for 1 site

- Access to the Macroponics network

Family Account

Allow the whole family to have an account

(5 Users)

- 50 GB Cloud Storage

- 25 GB Mail Storage

- Hosting service for 5 sites

- Access to the Macroponics network

Enterprise Account

Take your online business to the next level

(10 Users)

- Unlimited Cloud Storage

- Unlimited Mail Storage

- Hosting service for up to 10 sites

- Access to the Macroponics network